Five Questions You Must Ask About DeepSeek

DeepSeek-V2 is a large-scale model that competes with other frontier systems like LLaMA 3, Mixtral, and DBRX, and with Chinese models like Qwen-1.5 and DeepSeek V1. Others demonstrated simple but clear examples of advanced Rust usage, like Mistral with its recursive approach or Stable Code with parallel processing. The example highlighted the use of parallel execution in Rust. The example was relatively straightforward, emphasizing simple arithmetic and branching using a match expression. Pattern matching: the filtered variable is created by using pattern matching to filter out any negative numbers from the input vector. In the face of disruptive technologies, moats created by closed source are temporary. CodeNinja created a function that calculated a product or difference based on a condition. Returning a tuple: the function returns a tuple of the two vectors as its result. "DeepSeekMoE has two key ideas: segmenting experts into finer granularity for higher expert specialization and more accurate knowledge acquisition, and isolating some shared experts for mitigating knowledge redundancy among routed experts." The slower the market moves, the bigger the advantage. Tesla still has a first-mover advantage for sure.
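The post describes these Rust examples without showing them. As a minimal sketch, assuming only the standard library, the following reproduces the described techniques: a `match` guard that filters negative numbers out of an input vector, a function that returns a tuple of two vectors, a function that picks a product or a difference based on a condition, and a parallel sum using scoped threads. All function names are hypothetical; this is not the code that Mistral, Stable Code, or CodeNinja actually produced.

```rust
use std::thread;

/// Hypothetical helper: keeps only the non-negative values, using a
/// `match` guard as the filter (the "filtered variable" described above).
pub fn filter_non_negative(input: &[i32]) -> Vec<i32> {
    input
        .iter()
        .filter_map(|&x| match x {
            n if n >= 0 => Some(n),
            _ => None,
        })
        .collect()
}

/// Hypothetical helper: splits the input into (non-negative, negative)
/// and returns both vectors together as a tuple.
pub fn split_by_sign(input: &[i32]) -> (Vec<i32>, Vec<i32>) {
    let mut non_negative = Vec::new();
    let mut negative = Vec::new();
    for &x in input {
        match x {
            n if n < 0 => negative.push(n),
            n => non_negative.push(n),
        }
    }
    (non_negative, negative)
}

/// Hypothetical helper: returns a * b when `multiply` is true,
/// otherwise the difference a - b, branching with a match expression.
pub fn product_or_difference(a: i64, b: i64, multiply: bool) -> i64 {
    match multiply {
        true => a * b,
        false => a - b,
    }
}

/// Hypothetical helper: sums a slice by splitting it into chunks and
/// summing each chunk on its own scoped thread (std::thread::scope).
pub fn parallel_sum(data: &[i64], num_threads: usize) -> i64 {
    if data.is_empty() {
        return 0;
    }
    let threads = num_threads.max(1);
    // Ceiling division so every element lands in some chunk.
    let chunk_size = (data.len() + threads - 1) / threads;
    thread::scope(|s| {
        // Spawn all workers first, then join them, so they run concurrently.
        let handles: Vec<_> = data
            .chunks(chunk_size)
            .map(|chunk| s.spawn(move || chunk.iter().sum::<i64>()))
            .collect();
        handles
            .into_iter()
            .map(|h| h.join().expect("worker thread panicked"))
            .sum()
    })
}
```

For example, `split_by_sign(&[3, -1, 0, -7, 5])` returns `(vec![3, 0, 5], vec![-1, -7])`. Scoped threads let each worker borrow its chunk of `data` directly, without wrapping the slice in an `Arc`.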

You have to understand that Tesla is in a better position than the Chinese to take advantage of new techniques like those used by DeepSeek. Be like Mr Hammond and write more clear takes in public! Generally thoughtful chap Samuel Hammond has published "Ninety-five theses on AI". This is essentially a stack of decoder-only transformer blocks using RMSNorm, Grouped-Query Attention, some form of Gated Linear Unit, and Rotary Positional Embeddings. The current "best" open-weights models are the Llama 3 series, and Meta appears to have gone all-in to train the best possible vanilla dense transformer. These models are better at math questions and questions that require deeper thought, so they usually take longer to answer; however, they may present their reasoning in a more accessible fashion. This stage used one reward model, trained on compiler feedback (for coding) and ground-truth labels (for math). This lets you try out many models quickly and effectively for many use cases, such as DeepSeek Math (model card) for math-heavy tasks and Llama Guard (model card) for moderation tasks. A lot of the trick with AI is figuring out the right way to train these things so that you have a task which is doable (e.g., playing soccer) and at the Goldilocks level of difficulty: sufficiently difficult that you need to come up with some smart things to succeed at all, but sufficiently easy that it's not impossible to make progress from a cold start.
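The paragraph above names the building blocks of a decoder-only transformer without spelling them out. The following is a minimal, std-only sketch of three of them: RMSNorm, the query-to-KV head mapping used by Grouped-Query Attention, and a gated linear unit. The choice of SwiGLU as the "some form of Gated Linear Unit" is an assumption, and the surrounding matrix projections (and Rotary Positional Embeddings) are omitted; function names are hypothetical.

```rust
/// RMSNorm: divide each element by the root-mean-square of the whole
/// vector, then multiply by a learned per-dimension gain. Unlike
/// LayerNorm, there is no mean subtraction and no bias term.
pub fn rms_norm(x: &[f32], gain: &[f32], eps: f32) -> Vec<f32> {
    assert!(!x.is_empty(), "input must be non-empty");
    assert_eq!(x.len(), gain.len(), "gain must match the hidden size");
    let mean_sq = x.iter().map(|v| v * v).sum::<f32>() / x.len() as f32;
    let inv_rms = 1.0 / (mean_sq + eps).sqrt();
    x.iter().zip(gain).map(|(v, g)| v * inv_rms * g).collect()
}

/// Grouped-Query Attention: `n_q_heads` query heads share `n_kv_heads`
/// key/value heads, so each KV head serves a contiguous group of
/// `n_q_heads / n_kv_heads` query heads.
pub fn kv_head_for_query_head(q_head: usize, n_q_heads: usize, n_kv_heads: usize) -> usize {
    assert!(n_kv_heads > 0 && n_q_heads % n_kv_heads == 0, "heads must divide evenly");
    assert!(q_head < n_q_heads, "query head index out of range");
    let group_size = n_q_heads / n_kv_heads;
    q_head / group_size
}

/// Assumed SwiGLU gate, applied elementwise to already-projected inputs:
/// out = silu(gate) * up, where silu(g) = g * sigmoid(g) = g / (1 + e^-g).
pub fn swiglu(gate: &[f32], up: &[f32]) -> Vec<f32> {
    assert_eq!(gate.len(), up.len(), "gate and up projections must match");
    gate.iter()
        .zip(up)
        .map(|(&g, &u)| (g / (1.0 + (-g).exp())) * u)
        .collect()
}
```

For example, with 32 query heads and 8 KV heads, `kv_head_for_query_head` maps query heads 0 to 3 onto KV head 0 and query head 31 onto KV head 7. Sharing KV heads this way shrinks the key/value cache by the group size while keeping the full number of query heads.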

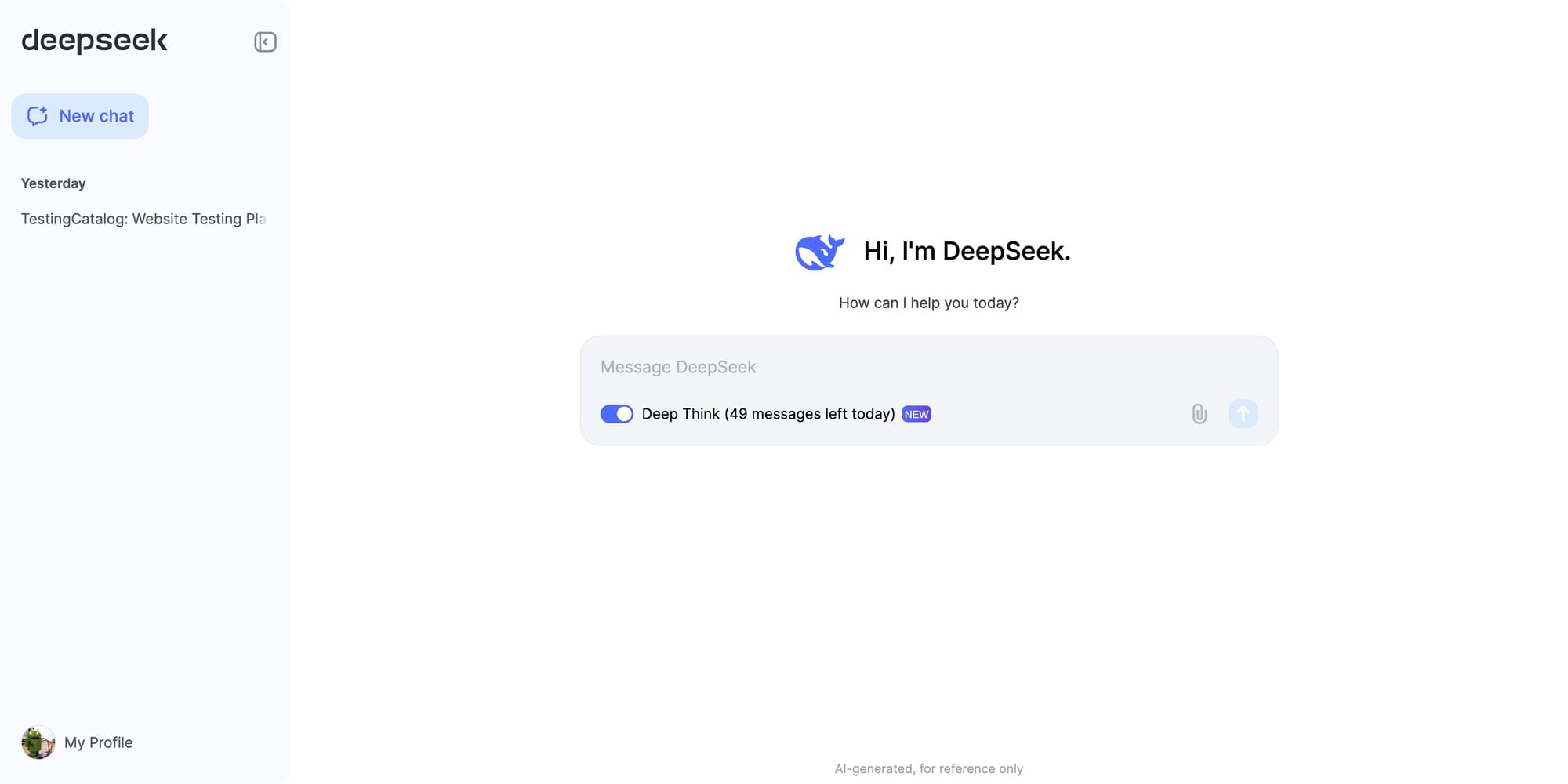

Please admit defeat or make a decision already. Haystack is a Python-only framework; you can install it using pip. Get started by installing with pip. Get started with E2B with the following command. A year that started with OpenAI dominance is now ending with Anthropic's Claude being my most-used LLM and with the arrival of several labs that are all trying to push the frontier, from xAI to Chinese labs like DeepSeek and Qwen. Despite being in development for a few years, DeepSeek seems to have arrived almost overnight after the release of its R1 model on Jan 20 took the AI world by storm, mainly because it offers performance that competes with ChatGPT-o1 without charging you to use it. Chinese startup DeepSeek has built and released DeepSeek-V2, a surprisingly powerful language model. The paper presents the CodeUpdateArena benchmark to test how well large language models (LLMs) can update their knowledge about code APIs that are continuously evolving. Smarter conversations: LLMs are getting better at understanding and responding to human language. This exam includes 33 problems, and the model's scores are determined by human annotation.

They don't, because they aren't the leader. DeepSeek's models are available on the web, through the company's API, and via mobile apps. Why this matters: "Made in China" will be a thing for AI models as well, and DeepSeek-V2 is a really good model! Using the reasoning data generated by DeepSeek-R1, we fine-tuned several dense models that are widely used in the research community. I have been using px indiscriminately for everything: images, fonts, margins, paddings, and more. And I'll do it again, and again, in every project I work on that still uses react-scripts. This is far from perfect; it's just a simple project to keep me from getting bored. This showcases the flexibility and power of Cloudflare's AI platform in generating complex content from simple prompts. Etc. etc. There could literally be no advantage to being early and every advantage to waiting for LLM projects to play out. Read more: The Unbearable Slowness of Being (arXiv). Read more: A Preliminary Report on DisTrO (Nous Research, GitHub). More information: DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model (DeepSeek, GitHub). SGLang also supports multi-node tensor parallelism, enabling you to run this model on multiple network-connected machines.
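The post only notes that SGLang supports multi-node tensor parallelism. As a rough, single-process sketch of the underlying idea (not SGLang's implementation, with threads standing in for GPUs or machines and no networking at all), the following splits a weight matrix's output rows into shards, lets each worker compute its slice of y = Wx independently, and concatenates the slices, which plays the role of the all-gather a real multi-node setup would perform over the network. The function names are hypothetical.

```rust
use std::thread;

/// Dense matrix-vector product y = W x, with W stored as a list of rows.
pub fn matvec(w: &[Vec<f32>], x: &[f32]) -> Vec<f32> {
    assert!(w.iter().all(|row| row.len() == x.len()), "row length must match input length");
    w.iter()
        .map(|row| row.iter().zip(x).map(|(a, b)| a * b).sum::<f32>())
        .collect()
}

/// Hypothetical tensor-parallel matvec: each of `n_shards` workers owns a
/// contiguous block of W's output rows and computes only its part of y.
pub fn sharded_matvec(w: &[Vec<f32>], x: &[f32], n_shards: usize) -> Vec<f32> {
    assert!(n_shards > 0, "need at least one shard");
    let rows_per_shard = (w.len() + n_shards - 1) / n_shards;
    if rows_per_shard == 0 {
        return Vec::new(); // empty weight matrix
    }
    thread::scope(|s| {
        // Every worker gets the full input x but only its own weight shard.
        let handles: Vec<_> = w
            .chunks(rows_per_shard)
            .map(|shard| s.spawn(move || matvec(shard, x)))
            .collect();
        // "All-gather": concatenate each shard's output slice in order.
        handles
            .into_iter()
            .flat_map(|h| h.join().expect("shard worker panicked"))
            .collect()
    })
}
```

Splitting along the output dimension means no worker needs another worker's partial results until the final concatenation; splitting along the input dimension instead would require summing partial outputs across workers (an all-reduce).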