Cats, Dogs and DeepSeek

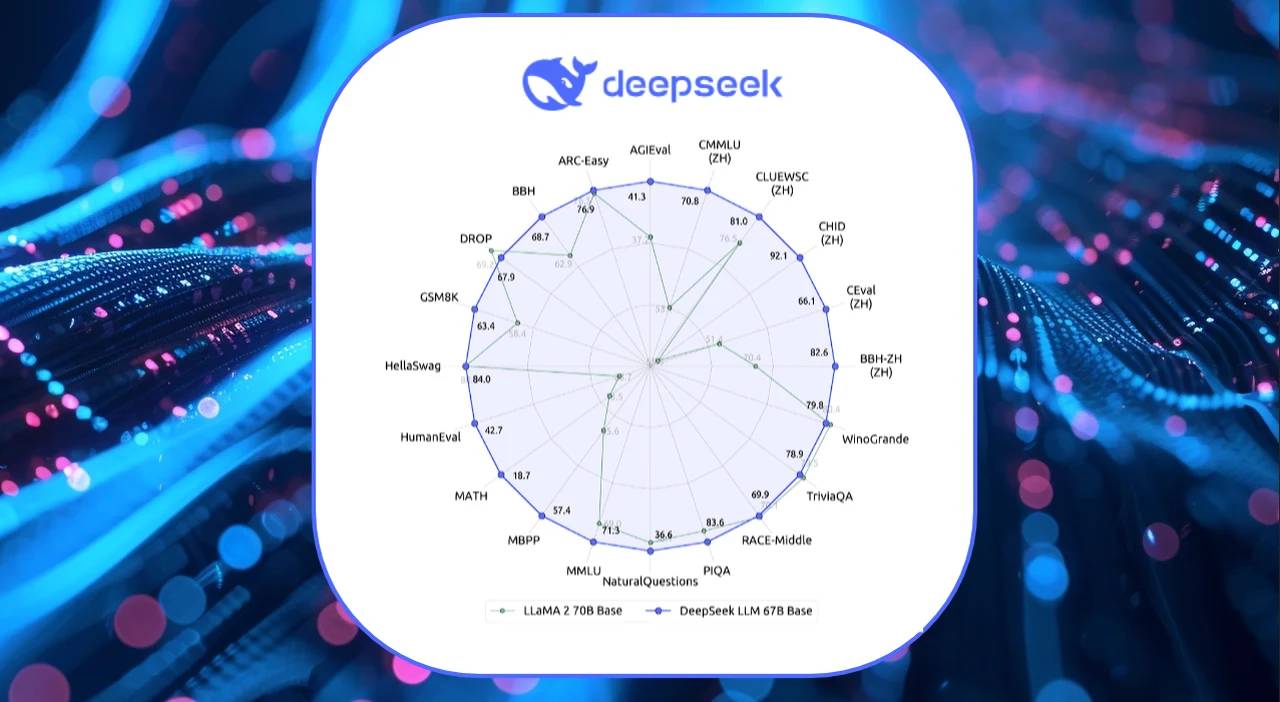

All other rights not expressly granted by these Terms are reserved by DeepSeek, and before exercising such rights, you must obtain written permission from DeepSeek. 3.2 When using the Services provided by DeepSeek, users shall comply with these Terms and adhere to the principles of voluntariness, equality, fairness, and good faith. DeepSeek, a China-based company that aims to "unravel the mystery of AGI with curiosity," has released DeepSeek LLM, a 67-billion-parameter model trained meticulously from scratch on a dataset of 2 trillion tokens. Their outputs are based on an enormous dataset of text harvested from internet databases, some of which contains speech disparaging to the CCP. 3.3 To meet legal and compliance requirements, DeepSeek has the right to use technical means to review the behavior and data of users of the Services, including but not limited to reviewing inputs and outputs, establishing risk-filtering mechanisms, and building databases of illegal content features. 3) Engaging in activities that infringe intellectual property rights or trade secrets, or that otherwise violate business ethics, or using algorithms, data, platforms, etc., to engage in monopolistic and unfair competitive behavior. If you do not accept the modified terms, please stop using the Services immediately.

DeepSeek shows that much of the modern AI pipeline isn't magic: it's consistent gains accumulated through careful engineering and decision making. It's all quite insane. Most commonly, we saw explanations of code outside of comment syntax.

After signing up, you may be prompted to complete your profile by adding details such as a profile picture, bio, or preferences.

"We believe formal theorem proving languages like Lean, which offer rigorous verification, represent the future of mathematics," Xin said, pointing to the growing trend in the mathematical community of using theorem provers to verify complex proofs.

The mixture of experts, being similar to the Gaussian mixture model, can also be trained by the expectation-maximization algorithm, just like Gaussian mixture models. Specifically, during the expectation step, the "burden" for explaining each data point is assigned over the experts, and during the maximization step, the experts are trained to improve the explanations they received a high burden for, while the gate is trained to improve its burden assignment.
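To make the two EM steps for a mixture of experts concrete, here is a minimal sketch in Python. It assumes two linear experts on scalar inputs, a softmax gate that is linear in the input, and a fixed, known noise variance. The function `fit_moe_em` and all of its parameters are hypothetical illustrations, not DeepSeek's training method. The gate's M-step takes a few gradient-ascent steps instead of maximizing exactly, which makes this a generalized EM variant; with a small enough step, the log-likelihood should still never decrease between iterations.

```python
import math


def log_normal(y, mean, var):
    # Log density of the Gaussian N(y; mean, var).
    return -0.5 * math.log(2 * math.pi * var) - (y - mean) ** 2 / (2 * var)


def log_softmax(logits):
    # Numerically stable log-softmax.
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def fit_moe_em(xs, ys, n_iters=50, var=0.25, gate_lr=0.2, gate_steps=20):
    """Hypothetical sketch: EM for 2 linear experts y ~ a*x + b with a softmax gate."""
    k, n = 2, len(xs)
    a, b = [1.0, -1.0], [0.0, 0.0]  # expert slopes/intercepts (asymmetric init)
    w, c = [0.0] * k, [0.0] * k     # gate slopes/intercepts (assumed linear gate)
    history = []                    # log-likelihood recorded at each E-step
    for _ in range(n_iters):
        # E-step: burden h[j] that expert j carries for explaining each point.
        burdens, loglik = [], 0.0
        for x, y in zip(xs, ys):
            log_g = log_softmax([w[j] * x + c[j] for j in range(k)])
            joint = [log_g[j] + log_normal(y, a[j] * x + b[j], var) for j in range(k)]
            m = max(joint)
            lse = m + math.log(sum(math.exp(v - m) for v in joint))
            loglik += lse
            burdens.append([math.exp(v - lse) for v in joint])
        history.append(loglik)
        # M-step (experts): burden-weighted least squares, solved in closed form.
        for j in range(k):
            s1 = sum(h[j] for h in burdens)
            sx = sum(h[j] * x for h, x in zip(burdens, xs))
            sxx = sum(h[j] * x * x for h, x in zip(burdens, xs))
            sy = sum(h[j] * y for h, y in zip(burdens, ys))
            sxy = sum(h[j] * x * y for h, x, y in zip(burdens, xs, ys))
            det = sxx * s1 - sx * sx
            if det > 1e-12:  # skip a degenerate update, keeping the old expert
                a[j] = (sxy * s1 - sx * sy) / det
                b[j] = (sxx * sy - sx * sxy) / det
        # M-step (gate): gradient ascent on mean sum_j h_j * log g_j(x),
        # pulling the gate's probabilities toward the burdens.
        for _ in range(gate_steps):
            gw, gc = [0.0] * k, [0.0] * k
            for h, x in zip(burdens, xs):
                g = [math.exp(v) for v in log_softmax([w[j] * x + c[j] for j in range(k)])]
                for j in range(k):
                    gw[j] += (h[j] - g[j]) * x / n
                    gc[j] += (h[j] - g[j]) / n
            for j in range(k):
                w[j] += gate_lr * gw[j]
                c[j] += gate_lr * gc[j]
    return (a, b, w, c), history, burdens
```

For example, fitting `xs = [i / 10 for i in range(-20, 21)]` against a piecewise-linear target (`2 * x` for negative `x`, `-3 * x + 1` otherwise) should yield a non-decreasing `history`. The two experts start with different slopes on purpose: with identical experts and a uniform gate, every burden would split 50/50 and the experts would never specialize.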

What does this mean for the future of work? The paper says that they tried applying it to smaller models and it did not work nearly as well, so "base models were bad then" is a plausible explanation, but it is clearly not true: GPT-4-base is probably a generally better (if more expensive) model than 4o, which o1 is based on (though it could be distilled from a secret larger one); and LLaMA-3.1-405B used a somewhat similar post-training process and is about as good a base model, but is not competitive with o1 or R1. "The model is prompted to alternately describe a solution step in natural language and then execute that step with code." Building on evaluation quicksand: why evaluations are always the Achilles' heel when training language models and what the open-source community can do to improve the situation. That is significantly less than the $100 million spent on training OpenAI's GPT-4.
